AI, Esq. Newsletter Kickoff!

My very first newsletter on AI for lawyers

Welcome to the AI, Esq. newsletter. I’ve been coming across all sorts of interesting things while exploring generative artificial intelligence (“genAI”) as a lawyer. My intention is to send you a brief monthly update collecting some of those things. I’ll also include practical tips and training on using AI to support your law practice.

This is an experiment. Not only is this my first AI newsletter, this is my first time writing a newsletter, period. So I would really appreciate any feedback, especially critical feedback. And if you know anyone who would be interested in this, please invite them to subscribe!

So, with those preliminaries out of the way, let’s get to it.

Notable finds

When I ran across this timeline of notable AI releases, I was blown away by how many innovative genAI products came out last year. That pace continued into January, with meaningful product updates from Google and Microsoft and exciting developments in open-source large language models. It looks like we are on track for another big year for this technology.

The author of this short post, Ethan Mollick, reliably offers thoughtful, practical perspectives on using genAI at work. This post collects his answers to questions he gets asked over and over again. It’s a great quick read packed with useful information.

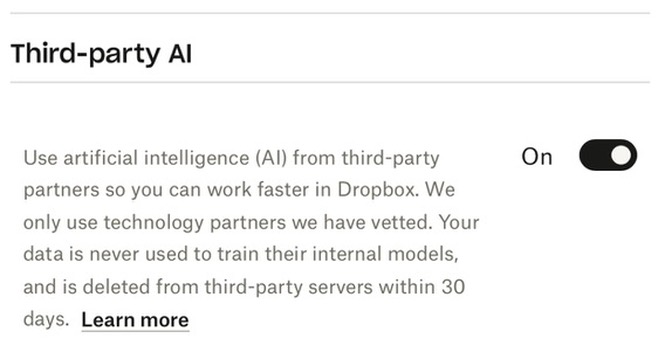

Recently, I’ve been very curious about data security in generative AI. I imagine a lot of you have the same questions I do about whether and how we can safely use AI technologies when confidential data is involved.

Simon Willison is a software developer who has done some critical work in this area (if you have heard of a “prompt injection” attack, that’s his term). I’m always interested in his perspective. This short piece outlines his view of a “trust crisis” impacting AI and data security.

Also on the topic of data protection, this Swiss law firm’s article gives a detailed breakdown of the data protection practices of various AI tools that might be used in the workplace. It also offers guidance on implementing AI tools at work for those concerned about data security. The article is part of an ongoing series, and if its quality is any indication, I’ll be checking out the other entries!

Tips, tools, and tutorials

This video is a presentation from the CEO of Section, a business education startup. I’ve watched a number of introductory genAI videos, and this is probably my favorite. It covers how to use ChatGPT, as well as how to think about where it might be useful in your workflow.

I would recommend skipping ahead to the 23-minute mark for the instructional content. The earlier material is mostly an overview of AI that may sound familiar, plus some of the speaker’s thoughts on where AI might be headed. That is interesting, but I think the rest of the video is far more useful.

One other note about this video: the speaker struck me as quite blasé about the data privacy concerns associated with ChatGPT. He explains that his company is a small startup without significant data security concerns. But as a lawyer dealing with proprietary data, I’ve got a very different perspective. So let me stress: I do not consider it safe or good practice to provide any client-confidential data to ChatGPT.

It’s rare that I have time to watch a lengthy video, so here is OpenAI’s written guide to prompting ChatGPT. It covers some of the same ground as the video above, and I’ve found it a handy reference. OpenAI developed ChatGPT, so this is coming straight from the horse’s mouth.

All right, y’all, that’s a wrap. See you next month!